January 15 - 18, 2024: MISTI MIT Media Lab x Smart Living Lab Workshop

The University of Fribourg is collaborating with the MIT Media Lab to host a workshop of talks and hands-on sessions on sensing and understanding environments, designing systems to study sleep and its physiological effects, and human rehabilitation and augmentation. The workshop aims to open up collaboration opportunities in the fields of built environments and human-computer interaction.

Prof. Joe Paradiso, Prof. Denis Lalanne, Patrick Chwalek, Sailin Zhong

Registration

Please enroll through the Google Form: https://forms.gle/JjsAHSU5xjNRH7Zb6

If you have any further questions, contact Sailin Zhong (sailin.zhong@unifr.ch) or Patrick Chwalek (chwalek@mit.edu).

Location

Smart Living Lab: Pass. du Cardinal 13b, 1700 Fribourg

UniFR Learning Lab: A201, Bvd Pérolles 90, 1700 Fribourg

Seminar Program

The seminar will take place in person from January 15 to January 18, 2024, at the Smart Living Lab and the Learning Lab of the University of Fribourg. The workshop will start with talks by the keynote speakers, workshop organizers, and core-topic researchers, followed by a two-day hackathon expanding on these topics. We will send enrollment confirmations to prospective participants who register their interest. The schedule may change slightly before the New Year.

| Date | Event | Time | Details | Location |
|---|---|---|---|---|
| Jan 15 | Warm-up and Learning | 8:30 - 9:00 am | Registration and welcome coffee with light breakfast | Smart Living Lab |
| | | 9:00 - 10:30 am | [Keynote] Prof. Joseph A. Paradiso: An Introduction to Sensing, Hardware, and Visualization | |
| | | 10:30 - 11:30 am | Participants' introductions (each introduction lasting 15 minutes) | |
| | | 12:00 - 1:00 pm | Lunch break | |
| | | 1:00 - 2:00 pm | Smart Living Lab tour: Blue Hall, Climatic Pavilion, NeighborHub, Climatic Chambers | |
| | | 2:00 - 3:00 pm | [Talk] Data Collection for Environmental and Personal Health | |
| | | 3:00 - 3:45 pm | [Talk] The Sleeping Mind | |
| | | 3:45 - 4:00 pm | Break | |
| | | 4:00 - 5:00 pm | [Talk] Rehabilitation and Augmentation | |
| | | 5:00 - 5:30 pm | Q&A on the research tools and discussion of initial project ideas | |
| Jan 16 | Diving Deeper into Sensing | 10:00 - 11:00 am | [Talk] Prof. Dusan Licina (Continuous Sensing: The Next Frontier of the Built Environment) | Smart Living Lab |
| | | 11:00 am - 12:00 pm | [Keynote] Prof. Denis Lalanne (Multimodal Interactions) | |
| | | 12:00 - 1:00 pm | Lunch break | |
| | | 1:00 - 2:00 pm | [Talk] Data, Interfaces, and Us | |
| | | 2:00 - 2:45 pm | [Talk] Crafting Interfaces to Optimize Sleep | |
| | | 2:45 - 3:00 pm | Break | |
| | | 3:00 - 4:00 pm | [Talk] Rehabilitation and Augmentation | |
| | | 4:00 - 5:00 pm | Discussions and BYOD: Bring Your Own Devices | |
| Jan 17 | Hackathon Challenge Day 1 | 9:00 am - 5:00 pm | Engage in coding, designing, and prototyping. Develop solutions, ideate on cross-collaboration, and join introductory workshops. | UniFR Learning Lab |
| | | 12:00 - 1:00 pm | Lunch break | |
| Jan 18 | Hackathon Challenge Day 2 | 9:00 am - 12:00 pm | Engage in coding, designing, and prototyping. Develop solutions and ideate on cross-collaboration. | UniFR Learning Lab |
| | | 12:00 - 1:30 pm | Lunch break | |
| | | 1:30 - 3:30 pm | Presentation of ideas/prototypes | |
| Jan 18 (Easter Eggs) | A Sonic Tour Through Time and Technology Featuring the Paradiso Synthesizer | 8:00 - 9:15 pm | [Talk] A Swiss-Born Synth Rig by Prof. Joseph A. Paradiso (a private tour of SMEM is available at 6:30 pm; the tour is limited to 15 people, so please contact Sailin or Patrick if you would like to join) | Swiss Museum for Electronic Music Instruments (SMEM), Pass. du Cardinal 1, 1700 Fribourg |

Keynotes

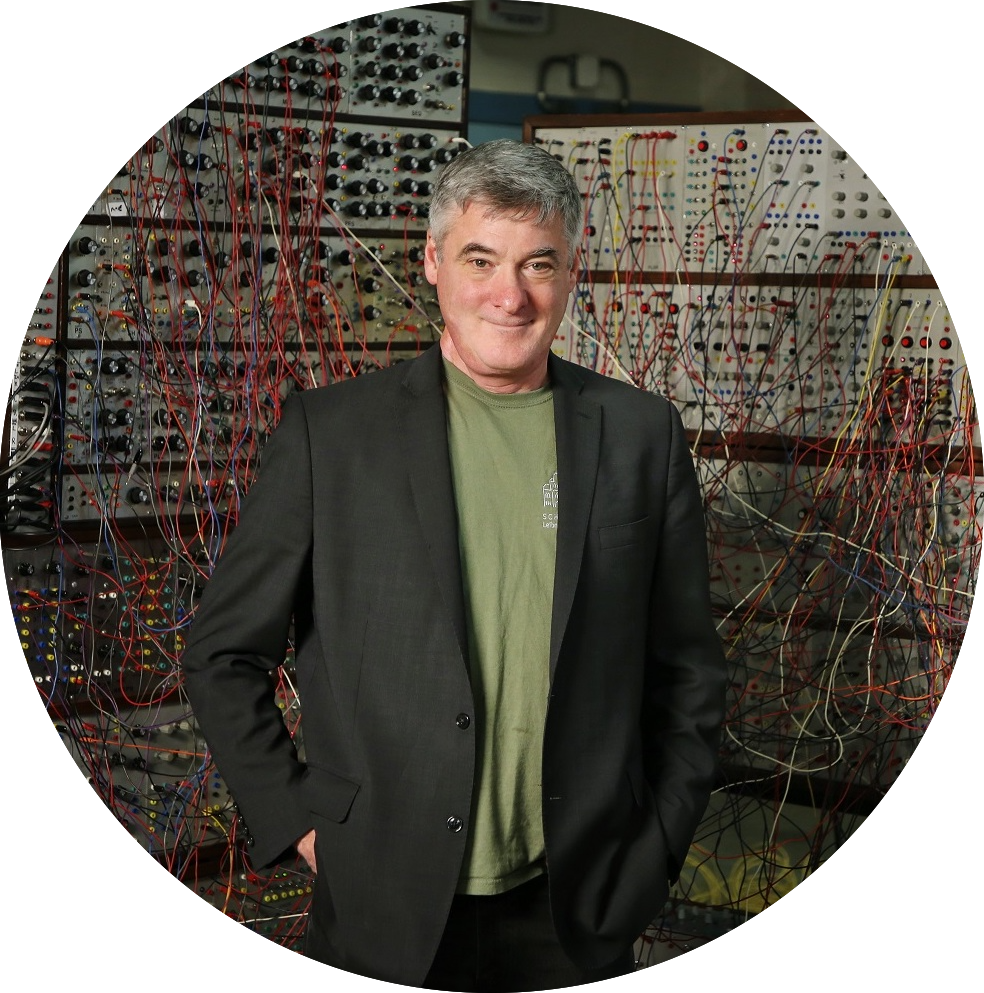

Prof. Joe Paradiso

MIT Media Lab

An Introduction to Sensing, Hardware, and Visualization

This talk will show how diverse, distributed sensors augment and mediate human experience, interaction, and perception, and will present new sensing modalities and enabling technologies that create new forms of interactive experience and expression. The research presented encompasses the development and application of various types of sensor substrates and networks, energy harvesting and power management, sensor representation in virtual and augmented reality, sensor-based inference, interactive systems and HCI, and the technical foundations of the Internet of Things. The work spans diverse application areas and a wide range of scales, from wearable systems to smart buildings, environmental sensing, and space missions.

Joe Paradiso is the Alexander W. Dreyfoos (1954) Professor at MIT's Program in Media Arts and Sciences. He directs the MIT Media Lab's Responsive Environments Group, which explores how sensor networks augment and mediate human experience, interaction and perception. He received a B.S. in electrical engineering and physics summa cum laude from Tufts University, and a Ph.D. in physics from MIT with Prof. Ulrich Becker in the Nobel Prize-winning group headed by Prof. Samuel C.C. Ting at the MIT Laboratory for Nuclear Science.

Paradiso's research interests include ubiquitous computing, embedded systems, sensor networks, wearable and body area networks, energy harvesting and power management for embedded sensors, and interactive media. He also designed and built one of the world's largest modular synthesizers and has designed MIDI systems for the musicians Pat Metheny and Lyle Mays. The synthesizer currently streams live-generated audio over the internet.

Website: http://www.media.mit.edu/~joep/

Prof. Denis Lalanne

University of Fribourg

Multimodal Interactions

Combining different interaction modalities (through fusion and fission) can create more effective technologies that go beyond interaction through the mouse, keyboard, and touch. This talk covers tangible objects, gestures, and natural language as interfaces for interaction, and how to apply the CARE and CASE design spaces for multimodality, among other concepts.

Denis Lalanne is a full professor in the Department of Informatics of the University of Fribourg and director of the Human-IST Institute. He also heads the "human-building interaction" group at the Smart Living Lab and is the Swiss representative at IFIP TC13. After completing a PhD at the Swiss Federal Institute of Technology (EPFL), a postdoc in the USER group at the IBM Almaden Research Center, a year of teaching and research at the University of Avignon, and a formative stint as a usability engineer at a Swiss start-up, he joined the University of Fribourg.

Lalanne's research interests include multimodal interfaces, information visualization, human-computer interaction, and human-building interaction.

Website: https://diuf.unifr.ch/people/lalanned/

Talks/Hackathon Topics

Environmental Sensing and Comfort

Jan 15 Afternoon Tour: Measuring the Environment

Some intriguing urban theories suggest that certain features, such as more green space and a greater diversity of local amenities, can enhance a city's appeal to pedestrians. But how much of a difference do these elements make, and to what extent? How do these theories intertwine and influence one another? This session explores ongoing research into the relationships between pedestrian activity and urban form.

Jan 15 Afternoon Session 1: Data Collection for Environmental and Personal Health

Building hardware for research can be tempting, but is it always necessary? In this talk, we will share our personal experience of designing and using custom sensing platforms for environmental and physiological data collection. We will also review the current landscape of open-source and commercial sensing solutions and offer some guidance on how to choose the best option for your research goals.

The environment around us affects our health and perception, but how can we measure and understand this relationship? In this session, we will present our latest work on collecting and analyzing data from various sources, such as environmental sensors, physiological monitors, and user surveys.

About the speakers

Patrick Chwalek (PhD Candidate, Responsive Environments, MIT Media Lab) is a PhD candidate in the Responsive Environments Group, where he develops and deploys sensors for real-world applications. He explores how environments influence people's physical and mental states, and how comfort varies with engagement. He also creates devices to study wildlife in their natural habitats, such as Arctic birds, Patagonian bees, and African wild dogs in Botswana.

Website: https://patrickchwalek.com/

Sailin Zhong (PhD Candidate, Human-IST Institute, University of Fribourg) researches the intersection of human comfort perception and interaction design, leveraging environmental and physiological sensing. She conducts interdisciplinary research spanning architecture and human-computer interaction, including city-scale 3D data visualization as well as interaction and interface design for Indoor Environment Quality.

Website: https://www.sailinzhong.net/

Kanaha Shoji (PhD Candidate, ETHOS (Engineering and Technology for Human-Oriented Sustainability) Lab, EPFL Fribourg) enjoys traveling, exploring new art and architecture, and learning about the differences between cities. That curiosity brought her to ETHOS at the Smart Living Lab, where she explores the relationships between humans and the built environment. Her primary focus is walkability in cities.

Website: https://www.linkedin.com/in/shojik

Interaction with Data

Jan 16 Afternoon Session 1: Data, Interfaces, and Us

This talk explores the challenges and opportunities of designing user interfaces for environmental and physiological sensing. We focus on how users perceive and interact with real-time data about their comfort and well-being in the built environment. We also discuss the importance of creating user interfaces that are engaging and accessible, and how such interfaces can help users gain insight into how their environment affects them. Sailin Zhong will also share more on interfaces for environmental sensing in this session.

About the speaker

Cathy M. Fang (PhD Candidate, Fluid Interfaces, MIT Media Lab) is a PhD student in the MIT Media Lab's Fluid Interfaces Group. Her research interests are multimodal input and interaction for mixed reality, spatial and context-aware computing, wearable interoceptive intervention, and computational cognition and perception. She has previously worked at Microsoft, Apple, IDEO, and Magic Leap. She holds a bachelor's degree with honors from Carnegie Mellon University in Mechanical Engineering and Human-Computer Interaction.

Website: https://cathy-fang.com/about.html

Sleep Optimization

Jan 15 Afternoon Session 2: The Sleeping Mind

Sleep plays a vital role in optimal cognitive function, learning, and wellbeing. Yet in our 24/7 society, many fail to prioritize sleep. This talk will provide an overview of research on sleep physiology, the role of sleep, the impact of sleep deprivation, and technologies for interfacing with the sleeping/dreaming mind.

Jan 16 Afternoon Session 2: Crafting Interfaces to Optimize Sleep

As technology advances, new opportunities arise not only to track sleep but to actively build interfaces that improve and leverage it. This talk will explore emerging areas of interface design related to sleep: Interfaces for Sleep and Interfaces from Sleep. These include designing devices, environments, and interventions to optimize sleep quality, using dream states for creativity, and using sleep data to guide the design of interfaces.

About the speaker

Abhinandan Jain (PhD Candidate, Fluid Interfaces, MIT Media Lab) is a PhD student in the Fluid Interfaces Group at the MIT Media Lab. Abhi's research explores technologies that digitally modulate aspects of human physiology and psychology. His research demonstrates non-invasive interfaces that modulate physiological dynamics as a way to influence processes such as sleep and emotions, thereby subtly influencing conscious experience.

Website: https://abyjain.github.io/

Rehabilitation and Augmentation

Jan 15 Afternoon Session 3: Rehabilitation and Augmentation (title TBD)

This talk explores the challenges of designing technologies for blind and low-vision (BLV) people as well as people with neuropsychological impairments. In particular, we discuss the challenges of designing interfaces without a full representation of what the user perceives. We also review examples of how users' abilities are taken into account and discuss how the BLV research field may be moving from a one-size-fits-all approach towards personalisable/adaptable solutions.

Jan 16 Afternoon Session 3: Rehabilitation and Augmentation (title TBD)

Rehabilitation/readaptation sessions play a vital role in improving the lives of people with impairments (e.g., mobility, visual, and cognitive). Whilst these sessions aim to maximize the use of the client's residual functions, they are frequently perceived as uninteresting and tedious. In this talk, we offer examples of how rehabilitation tasks can be gamified, as well as our personal experience designing such tasks with a focus on low-vision people. We also discuss how these tasks can offer insights to rehabilitation therapists and how they can be tailored to each person's needs.

About the speakers

Sam Chin (PhD Candidate, Responsive Environments, MIT Media Lab) is a graduate student in the Responsive Environments Group at the MIT Media Lab. Her research explores how technology can be used to expand the boundaries of human perception and improve the way we experience the world around us. On one hand, she builds hardware and interfaces for sensory augmentation. On the other, she examines the neuroscience of how we learn and integrate senses.

Website: https://www.samch.in/

Yong-Joon Thoo (PhD Candidate, Human-IST Institute, University of Fribourg) is a PhD candidate at the Human-IST Institute of the University of Fribourg. His research aims to improve the quality of life of blind and low-vision people by providing them with an augmented-reality-based platform to train their residual vision and develop adaptive strategies in an engaging manner.

Website: https://www.linkedin.com/in/yong-joon-thoo-4370b6156/